Onyekachukwu Chukwuka

A Lead Engineer and Solutions Architect with deep expertise in Java, Kotlin, Scala, Python and cloud services. He designs scalable, secure systems and architects resilient solutions, blending hands-on leadership with strategic vision to solve complex challenges and drive impactful digital transformation across industries.

Article by Gigson Expert

There are days you decide you want to look good. That was me a few days ago.

It had been too long since my last haircut, so I booked my barber, set the time, and called an Uber.

Everything was smooth until we hit a wall of traffic. Ten minutes turned into twenty, and twenty felt like it might become thirty. For a moment, it looked like I might miss the appointment (which wouldn’t have been the end of the world, but still, I hate being the guy who strolls in late).

I was already mentally drafting the apology when the driver said, “I know a faster way. Want me to take it?” Naturally, I told him, “Go ahead.”

He drove a bit further, took a left into a quieter road, followed a few winding turns, then made a longer right. After navigating his series of shortcuts, we rejoined the main road, this time near the front of the jam. Within two minutes, we were past a hold-up that would’ve taken at least ten.

I laughed and said, “Do you know what you just did?” He shook his head. “In tech, we call that caching.” He looked curious, so I kept going. “And there’s a tool named Redis that’s basically the secret map of shortcuts. So servers never have to sit in traffic again.”

Understanding Caching: The Shortcut

Modern systems rely heavily on speed. Users expect quick responses. Servers need relief from unnecessary work. Redis makes all of that possible through one simple idea:

Find the shortcut. Skip the slow route. Get there faster.

Let’s break this down simply.

The driver didn’t build a new road. He simply used a secret faster route. One that bypassed the usual slow path. And that’s exactly how caching works.

When apps or websites need to get information, they usually fetch it from a main database. That database may be large, reliable, and well-organized, but constant and repeated requests can slow things down - especially when many users are asking for the same data.

Caching steps in to fix that.

Instead of making the app repeat the same long journey every time, a quick-access copy of the data is stored in a faster location (in memory). So when the data is needed again, the app retrieves it almost instantly.

That “faster location” is the cache.

So, What Is Redis?

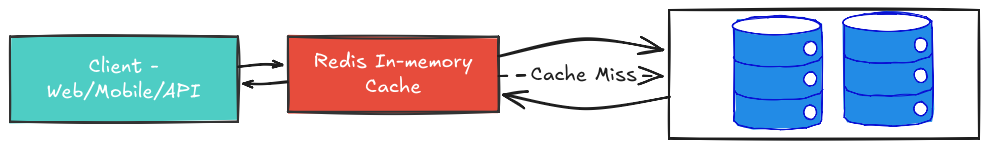

Redis is a high-speed, in-memory data store that’s commonly used as a cache.

Let’s look at it this way. If caching is taking a shortcut, Redis is the constantly updated map that already knows every back road and detour.

Here are some reasons Redis is popular and powerful:

- It’s extremely fast. Redis stores data in memory (RAM), not on disk. Memory access is far quicker, so responses typically come back in under a millisecond.

- It reduces the load on your main database. If Redis answers many of the repeated requests, your database can focus on the heavier, more important tasks.

- It helps your system scale gracefully. As your users grow, Redis helps keep things smooth and responsive.

- It’s versatile. Redis can store simple values, lists, sets, counters, and even act as a message broker for queues.

Quick Note: Redis doesn’t replace your main database. It sits in front of it and answers repeated requests faster.

Core Redis Features

So now that we understand the why of Redis, let’s see how Redis achieves this behind the scenes.

1. In-Memory Storage

Redis stores data directly in memory (RAM) instead of writing it to disk like traditional databases. Memory access is orders of magnitude faster than disk access, so fetching data from Redis feels almost instant.

When an app repeatedly requests the same data, say, a popular homepage or a product list, Redis serves that data up in well under a millisecond because it’s sitting right in memory.

2. Key-Value Structure

Redis is basically a giant, lightning-fast dictionary that lives in RAM. Everything is stored as a key-value pair. The key is always a string; the value can be almost anything. Here are the data types people actually use every day:

```
# Simple string (perfect for cached JSON)
GET user:1234:profile
→ "{\"name\": \"Ada\", \"plan\": \"premium\"}"

# Hashes - like a mini object
HGETALL user:1234
→ name → Ada
  plan → premium
  credits → 47

# Lists - for queues or recent activity
LPUSH recent:posts "post:987"
# 10 most recently pushed posts
LRANGE recent:posts 0 9

# Sets - unique items, great for tags or "who liked this"
SADD post:987:likes user:12 user:45 user:89
SMEMBERS post:987:likes

# Sorted Sets - leaderboards in one command
ZINCRBY leaderboard 1 "Ada"
# Top 10, highest score first (plain ZRANGE returns the lowest scores first)
ZREVRANGE leaderboard 0 9 WITHSCORES
```

Asking Redis for a key is one network hop and usually takes less than 1 ms. No table scans, no indexes, no disk seeks. Just “here’s your data.” And that’s the real reason Redis feels magical.

3. Eviction Policies

Imagine your driver can only remember 100 shortcuts at a time. One day, he discovers a new route - the 101st. To save it, he has to forget one of the older shortcuts he barely ever uses anymore. That’s exactly what Redis eviction policies do.

Redis lives in memory, and memory isn’t infinite. When memory usage reaches the limit you configured (maxmemory), Redis picks something to “forget” so it can store new, more useful data. Which keys it removes depends on the eviction policy you choose.

The most common choices are:

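- Least Recently Used (LRU) - evicts data that hasn’t been requested in a long time.

- Least Frequently Used (LFU) - evicts data that’s rarely asked for, even if it was used recently.

- You pick the behaviour with the maxmemory-policy setting: noeviction, allkeys-lru, volatile-lru, allkeys-lfu, volatile-lfu, allkeys-random, volatile-random, or volatile-ttl. The volatile- variants only consider keys that have a TTL, and noeviction rejects new writes instead of removing anything.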

This keeps your cache filled with the hottest, most relevant data instead of old stuff nobody needs anymore.
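To make the LRU idea concrete, here is a minimal Python sketch of a driver who can only remember two shortcuts. The `LRUCache` class and the route names are made up for illustration; Redis itself uses an approximated LRU based on sampling rather than an exact ordering like this one.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache that evicts the least recently used key when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()  # oldest use first, newest use last

    def get(self, key):
        if key not in self._items:
            return None
        # A read counts as a "use": move the key to the most-recent end.
        self._items.move_to_end(key)
        return self._items[key]

    def set(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            # Over capacity: drop the entry at the least-recent end.
            self._items.popitem(last=False)


shortcuts = LRUCache(capacity=2)
shortcuts.set("route:a", "left at the market")
shortcuts.set("route:b", "behind the stadium")
shortcuts.get("route:a")                        # route:a is now the most recent
shortcuts.set("route:c", "through the estate")  # no room left, route:b is evicted
```

Because `route:a` was read just before the third shortcut arrived, it survives and `route:b` is the one that gets forgotten.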

One bonus: you can also set a TTL (time-to-live) on any key. It’s like telling Redis, “This shortcut is only good for the next 10 minutes - forget it after that.”

Perfect for things like one-time login codes or trending leaderboards that change fast.
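In Redis itself, a TTL is set with something like `SET key value EX 600` or `EXPIRE key 600`. The sketch below only imitates that behaviour in plain Python so it can run without a server; the `TTLCache` class, the key name, and the injectable clock are assumptions made for the example.

```python
import time


class TTLCache:
    """Toy cache where each key can carry an expiry time."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._items = {}  # key -> (value, expires_at or None)

    def set(self, key, value, ttl_seconds=None):
        expires_at = None if ttl_seconds is None else self._clock() + ttl_seconds
        self._items[key] = (value, expires_at)

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if expires_at is not None and self._clock() >= expires_at:
            # Expired: forget the key, as if it had never been stored.
            del self._items[key]
            return None
        return value


# A fake clock lets us "fast-forward" time instead of sleeping.
now = [0.0]
codes = TTLCache(clock=lambda: now[0])
codes.set("login-code:1234", "482913", ttl_seconds=600)  # good for 10 minutes
fresh = codes.get("login-code:1234")  # still valid
now[0] += 601                         # just over 10 minutes later
stale = codes.get("login-code:1234")  # expired, so nothing comes back
```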

With the right eviction policy (and a sprinkle of TTLs where it makes sense), Redis stays fast, lean, and always ready with the shortcuts your app actually needs right now.

4. Replication and Clustering

As your traffic grows, Redis can copy data to replica nodes (replication) and split the keyspace across multiple nodes (clustering). Replication keeps data available if a node fails, and clustering spreads the load, so your system stays fast even when thousands of users are accessing it simultaneously.
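Redis Cluster decides where a key lives by hashing it into one of 16384 hash slots (CRC16 of the key, modulo 16384), and each node owns a share of those slots. Here is a rough Python sketch of that mapping. It assumes Python’s `binascii.crc_hqx` with an initial value of 0 matches the CRC16 variant Redis uses, it ignores hash tags (the `{...}` part of a key), and the even split in `node_for` and the node names are hypothetical rather than how a real cluster assigns slots.

```python
import binascii

HASH_SLOTS = 16384  # number of hash slots in Redis Cluster


def key_slot(key):
    """Map a key to a hash slot: CRC16(key) mod 16384 (hash tags ignored)."""
    crc = binascii.crc_hqx(key.encode("utf-8"), 0)
    return crc % HASH_SLOTS


def node_for(key, nodes):
    """Hypothetical even split of the slot range across the given nodes."""
    slots_per_node = HASH_SLOTS // len(nodes)
    index = min(key_slot(key) // slots_per_node, len(nodes) - 1)
    return nodes[index]


nodes = ["node-a", "node-b", "node-c"]  # made-up node names
slot = key_slot("user:1234")
owner = node_for("user:1234", nodes)
```

The same key always hashes to the same slot, so any client can work out which node to ask without a central lookup.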

5. Persistence and Backups

Even though Redis lives in memory, it can periodically save data to disk so you don’t lose everything if the server restarts. You get the best of both worlds - speed from memory and safety from backups.

Redis offers two persistence options: RDB (compact periodic snapshots - fast and small) and AOF (logs every write - maximum safety).

All that remains is how our app leverages all of this to deliver a very fast and reliable system. Let’s quickly see some of the common strategies and patterns that broadly cover most use cases.

Caching Patterns

Caching strategies and patterns are essential for optimizing application performance, data consistency, and resource utilization. Below is an overview of the most widely used caching strategies and their trade-offs.

1. Cache-Aside (Lazy Loading) – Most common

In the cache-aside pattern, the application checks the cache for data first. If the data isn’t present (a cache miss), it fetches it from the database, stores it in the cache, and returns it to the user. This ensures only the requested data is cached and avoids unnecessary memory use. However, the first request for new data does incur additional latency.

- On miss: read from DB → write to Redis → return data

- On hit: return directly from Redis

- On update/delete in DB: invalidate or update Redis key

Most apps start with cache-aside, and many never need anything else.
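The three steps above can be sketched in a few lines of Python. To keep it runnable without a server, plain dictionaries stand in for both Redis and the main database, and the function names (`get_profile`, `update_profile`, `load_from_db`) are made up for this example. With a real client such as redis-py, the dictionary reads and writes would become calls like `r.get(key)`, `r.set(key, value, ex=ttl)`, and `r.delete(key)`.

```python
import json

# Stand-ins so the sketch runs without a server: `cache` plays the role of
# Redis and `database` plays the role of the main database.
cache = {}
database = {"user:1234": {"name": "Ada", "plan": "premium"}}
db_reads = 0  # counts trips down the "slow road"


def load_from_db(key):
    global db_reads
    db_reads += 1
    return database.get(key)


def get_profile(key):
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)        # hit: answer straight from the cache
    record = load_from_db(key)           # miss: take the slow route once
    if record is not None:
        cache[key] = json.dumps(record)  # remember it for next time
    return record


def update_profile(key, record):
    database[key] = record
    cache.pop(key, None)  # invalidate so the next read reloads fresh data


first = get_profile("user:1234")   # miss, reads the database
second = get_profile("user:1234")  # hit, database untouched
update_profile("user:1234", {"name": "Ada", "plan": "free"})
third = get_profile("user:1234")   # miss again after invalidation
```

Three reads cost only two database trips here, and the gap widens with every extra read between updates.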

2. Write-Through

With write-through caching, any data written to the database is written to the cache at the same time. This approach maintains strong consistency between cache and database, but can add latency to write operations.

- Every write goes to both cache and DB

- Keeps cache always consistent (but slower writes)
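As a rough illustration, here is write-through with dictionaries standing in for Redis and the database. The `WriteThroughStore` class is hypothetical, and writing to the database before the cache is just one reasonable ordering chosen for this sketch.

```python
class WriteThroughStore:
    """Toy store where every write updates the database and the cache together."""

    def __init__(self):
        self.database = {}  # stand-in for the main database
        self.cache = {}     # stand-in for Redis

    def write(self, key, value):
        # Database first: if this step raised, the cache would not get ahead
        # of the source of truth. (An ordering choice made for this sketch.)
        self.database[key] = value
        self.cache[key] = value

    def read(self, key):
        if key in self.cache:
            return self.cache[key]
        return self.database.get(key)


store = WriteThroughStore()
store.write("post:987:title", "Redis in five minutes")
title = store.read("post:987:title")  # served from the cache, already up to date
```

The write does two jobs instead of one, which is where the extra write latency comes from.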

3. Write-Behind (Write-Back)

Under this pattern, data is first written to the cache, and updates to the database occur asynchronously. This can boost write performance but increases complexity and risks data inconsistency if failures occur before data is written back to the database.

- Write to cache first, later sync to DB

- Very fast writes, but risk of data loss
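A small sketch shows both the speed and the risk. The `WriteBehindStore` class below is made up for illustration, with dictionaries as stand-ins for Redis and the database; in a real system `flush` would run in the background on a timer or a worker, not be called by hand.

```python
from collections import deque


class WriteBehindStore:
    """Toy store that writes to the cache now and to the database later."""

    def __init__(self):
        self.cache = {}          # stand-in for Redis
        self.database = {}       # stand-in for the main database
        self._pending = deque()  # writes waiting to reach the database

    def write(self, key, value):
        self.cache[key] = value
        self._pending.append((key, value))  # fast: no database call here

    def flush(self):
        """Apply queued writes to the database in order; returns how many."""
        flushed = 0
        while self._pending:
            key, value = self._pending.popleft()
            self.database[key] = value
            flushed += 1
        return flushed


store = WriteBehindStore()
store.write("post:987:views", 1)
store.write("post:987:views", 2)
in_db_before_flush = "post:987:views" in store.database  # not there yet
flushed = store.flush()                                  # both writes land now
```

Anything still sitting in the pending queue when the process crashes never reaches the database, which is exactly the data-loss risk mentioned above.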

4. Read-Through

Similar to cache-aside, with read-through caching, the application always reads from the cache. If the data isn’t there, the cache itself fetches the data from the underlying store and updates itself. This simplifies application logic but relies more on the cache system’s integrations.

- Application always reads from cache.

- Cache misses are handled by the cache itself (supported by some clients/libraries).
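The difference from cache-aside is easiest to see in code: the application only ever calls `get`, and the cache owns the loading logic. This is a minimal sketch with a hypothetical `ReadThroughCache` class and a made-up `load_product` loader, not the API of any particular Redis client.

```python
class ReadThroughCache:
    """Toy cache that loads missing keys itself using a loader function."""

    def __init__(self, loader):
        self._loader = loader  # how to fetch from the underlying store
        self._items = {}

    def get(self, key):
        if key not in self._items:
            # Miss: the cache, not the application, goes to the source.
            self._items[key] = self._loader(key)
        return self._items[key]


products = {"product:42": "Mechanical keyboard"}  # stand-in for the database
load_calls = []                                   # records each trip to the source


def load_product(key):
    load_calls.append(key)
    return products.get(key)


catalog = ReadThroughCache(loader=load_product)
first = catalog.get("product:42")   # miss, the cache calls the loader
second = catalog.get("product:42")  # hit, the loader is not called again
```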

5. Refresh-Ahead (Pre-fetch)

This strategy proactively refreshes cache entries before they expire, preventing cache misses for frequently accessed items and reducing the risk of “cache stampede” (when many requests simultaneously miss the cache and hit the backend).
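Here is one way the idea could look in Python. The `RefreshAheadCache` class, the `refresh_window` parameter, and the fake clock are all assumptions made for this sketch. It also refreshes inline to stay short, whereas a real system would serve the cached value immediately and refresh in the background.

```python
import time


class RefreshAheadCache:
    """Toy cache that reloads an entry shortly before its TTL runs out."""

    def __init__(self, loader, ttl, refresh_window, clock=time.monotonic):
        self._loader = loader
        self._ttl = ttl
        self._refresh_window = refresh_window
        self._clock = clock
        self._items = {}  # key -> (value, expires_at)

    def _reload(self, key, now):
        value = self._loader(key)
        self._items[key] = (value, now + self._ttl)
        return value

    def get(self, key):
        now = self._clock()
        entry = self._items.get(key)
        if entry is None or now >= entry[1]:
            return self._reload(key, now)  # plain miss or already expired
        value, expires_at = entry
        if expires_at - now <= self._refresh_window:
            return self._reload(key, now)  # close to expiry: refresh early
        return value


now = [0.0]  # fake clock so the example does not need to sleep
loads = []   # records the time of every trip to the backend


def load_homepage(key):
    loads.append(now[0])
    return f"homepage rendered at t={now[0]:.0f}"


pages = RefreshAheadCache(load_homepage, ttl=60, refresh_window=10,
                          clock=lambda: now[0])
pages.get("page:home")           # t=0: first load
now[0] = 30.0
pages.get("page:home")           # t=30: served from cache
now[0] = 55.0
pages.get("page:home")           # t=55: within 10s of expiry, refreshed early
now[0] = 100.0
latest = pages.get("page:home")  # t=100: still cached thanks to the early refresh
```

Without the early refresh at t=55, the entry would have expired at t=60 and the request at t=100 would have been a miss.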

Real Use Cases

- Caching popular pages or API responses

- Storing login sessions

- Counting likes, views, votes, etc.

- Managing queues and background tasks

Any place where speed, repetition, and efficiency matter, Redis fits right in.

Final Thoughts

When properly implemented, the right caching strategy, combined with consistent monitoring and adherence to key best practices, can deliver significant gains in performance, scalability, and user experience.

Also, setting up Redis is straightforward:

- Launch a Redis server, either:

  - a cloud instance (e.g., Railway, Upstash, AWS ElastiCache, or Redis Cloud), or

  - an on-prem server.

- Connect to it through a client library.

- Configure your caching patterns and eviction policies based on your application’s requirements.

Redis also ships with built-in tools, such as the INFO and SLOWLOG commands, to help you monitor performance and fine-tune results.

Choosing the right caching strategy depends on your application’s specific needs. Cache-aside is ideal for general use; write-through suits systems that prioritize consistency; write-behind suits systems that handle high write volumes; and refresh-ahead excels in latency-sensitive, read-heavy workloads.

Ultimately, Redis doesn’t change your application’s goals; it simply helps you reach them faster. Just as the driver found a quicker route to the same destination, Redis helps your applications bypass bottlenecks and arrive at their results with greater efficiency.

Next step: spin up a free Redis instance and try caching your slowest API endpoint. You’ll be shocked at how easy it is.
